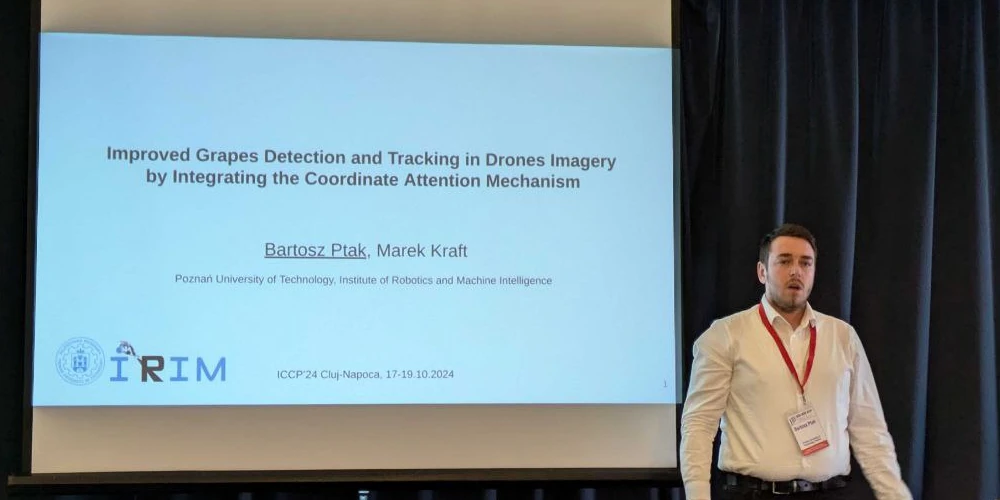

IEEE ICCP Conference 2024 - Cluj-Napoca, Romania - Our contribution

On October 17-19, Przemysław and Bartosz participated in the 20th International Conference on Intelligent Computer Communication and Processing (ICCP 2024) in Cluj-Napoca, Romania. In addition to attending interesting lectures, they presented their own work, which will be published in the conference proceedings. Bartosz presented his paper “Improved Grapes Detection and Tracking in Drones Imagery by Integrating the Coordinate Attention Mechanism”, and Przemysław presented his paper “Streamlining Crop Segmentation with Multispectral Imaging and Foundation Models: Minimizing Manual Annotation”.

The four days were filled not only with scientific activities but also with sightseeing and local cuisine!

Improved Grapes Detection and Tracking in Drones Imagery by Integrating the Coordinate Attention Mechanism

Recent advancements in deep learning algorithms have revolutionised computer vision, significantly impacting precision agriculture and farming automation. Object detection and tracking techniques, supported by drones equipped with vision sensors, play pivotal roles in monitoring and enhancing agricultural processes. This study focuses on improving object detection for precision viticulture, addressing challenges such as the small size of grape bunches and drone-induced distortions. By integrating the Coordinate Attention mechanism into the GELAN network, we enhance the detection of grapes in high-resolution images. Additionally, the study evaluates three methods for stabilising grape tracking, with ORB feature matching proving the most effective. Overall, these advancements contribute to automated crop monitoring and increased agricultural productivity through robust computer vision techniques. The findings underscore the significance of tailored approaches in adapting object detection technologies to specific precision agriculture applications.
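To make the Coordinate Attention idea more concrete, here is a minimal NumPy sketch of a single block, following the general design of Hou et al. (CVPR 2021): the feature map is average-pooled separately along its height and its width, both pooled descriptors pass through a shared 1×1 convolution, and the resulting per-row and per-column weights rescale every position. This is not the authors' code. Where and how the block is inserted into GELAN is not described in this post, biases and batch normalisation are omitted, and random weights stand in for trained ones.

```python
import numpy as np


def h_swish(x):
    """Hard-swish activation, as used in the original Coordinate Attention block."""
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x, w_reduce, w_h, w_w):
    """Apply a Coordinate Attention block to a feature map x of shape (C, H, W).

    w_reduce: (C_mid, C) weights of the shared 1x1 conv that squeezes channels.
    w_h, w_w: (C, C_mid) weights of the 1x1 convs for the height/width branches.
    Biases and batch normalisation are omitted in this sketch.
    """
    _, h, _ = x.shape
    # Direction-aware pooling: average over width -> (C, H), over height -> (C, W).
    z_h = x.mean(axis=2)
    z_w = x.mean(axis=1)
    # Shared 1x1 conv on the concatenated descriptors; on a (C, L) tensor a
    # 1x1 conv is just a matrix product over the channel axis.
    f = h_swish(w_reduce @ np.concatenate([z_h, z_w], axis=1))  # (C_mid, H + W)
    # Split back into the height and width branches.
    f_h, f_w = f[:, :h], f[:, h:]
    # Per-direction attention weights in (0, 1).
    g_h = sigmoid(w_h @ f_h)  # (C, H)
    g_w = sigmoid(w_w @ f_w)  # (C, W)
    # Position (i, j) of channel c is scaled by g_h[c, i] * g_w[c, j].
    return x * g_h[:, :, None] * g_w[:, None, :]


rng = np.random.default_rng(0)
C, H, W, reduction = 16, 20, 30, 32
C_mid = max(8, C // reduction)  # channel reduction with a floor of 8
x = rng.standard_normal((C, H, W))
y = coordinate_attention(
    x,
    rng.standard_normal((C_mid, C)) * 0.1,
    rng.standard_normal((C, C_mid)) * 0.1,
    rng.standard_normal((C, C_mid)) * 0.1,
)
print(y.shape)  # (16, 20, 30)
```

Because the two attention maps encode position along each axis separately, the block can emphasise specific rows and columns, which is one reason it is considered useful for small objects such as grape bunches.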
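The abstract does not say how the ORB matches are used for stabilisation. One common pattern, assumed here, is to estimate the camera motion between consecutive frames from matched keypoints and then map the previous frame's track positions into the current frame before associating new detections. The sketch covers only that compensation step and assumes the matched point pairs already exist (in OpenCV they would typically come from `cv2.ORB_create`, `detectAndCompute` and a `cv2.BFMatcher` with `cv2.NORM_HAMMING`). The helper names `estimate_affine` and `compensate_tracks` are hypothetical.

```python
import numpy as np


def estimate_affine(src, dst):
    """Least-squares 2D affine transform mapping src points onto dst points.

    src, dst: (N, 2) arrays of matched keypoint coordinates, N >= 3.
    Returns a (2, 3) matrix A such that dst ~= [x, y, 1] @ A.T.
    """
    design = np.hstack([src, np.ones((src.shape[0], 1))])  # (N, 3)
    # Solve design @ A.T ~= dst for A.T (shape (3, 2)) in the least-squares sense.
    a_t, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return a_t.T


def compensate_tracks(centres, affine):
    """Map track centres from the previous frame into the current frame."""
    return np.hstack([centres, np.ones((centres.shape[0], 1))]) @ affine.T


# Synthetic camera motion: a 2-degree rotation plus a translation.
rng = np.random.default_rng(1)
theta = np.deg2rad(2.0)
true_affine = np.array([[np.cos(theta), -np.sin(theta), 12.0],
                        [np.sin(theta), np.cos(theta), -5.0]])
prev_pts = rng.uniform(0, 1000, size=(50, 2))  # stand-in for ORB keypoints
curr_pts = np.hstack([prev_pts, np.ones((50, 1))]) @ true_affine.T

A = estimate_affine(prev_pts, curr_pts)
tracks_prev = np.array([[100.0, 200.0], [640.0, 360.0]])  # grape track centres
tracks_curr_pred = compensate_tracks(tracks_prev, A)
print(np.round(A, 3))
```

Plain least squares keeps the example short. Real ORB matches contain outliers, so a robust estimator such as RANSAC (the default method of OpenCV's `cv2.estimateAffinePartial2D`) is the safer choice in practice.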

Streamlining Crop Segmentation with Multispectral Imaging and Foundation Models: Minimizing Manual Annotation

Deep learning advancements have significantly enhanced computer vision applications in precision agriculture. While RGB cameras operating in visible light are affordable, they provide limited information compared to multispectral equipment. This research analyses methods to reduce the need for manual annotation when training a model using only RGB images, without compromising the model’s accuracy. We propose a semi-supervised approach where a teacher model, trained on multispectral images, generates artificial ground truth data to train a student model that operates solely on RGB images. This strategy has enabled us to achieve nearly a tenfold reduction in the required training data while maintaining similar performance metrics. Additionally, we explore the potential of segmentation foundation models to simplify the manual annotation process, so that annotators only need to draw bounding boxes instead of full segmentation masks. Our findings also indicate that using multispectral images as input for the Segment Anything Model is more effective than using RGB images.
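The teacher-student idea can be illustrated on a toy per-pixel problem. In this sketch, which is an assumption and not the method from the paper, an NDVI threshold on the red and near-infrared bands stands in for the trained multispectral teacher, and a logistic-regression classifier on RGB values stands in for the student network. The reflectance ranges and the helper names (`make_pixels`, `teacher_predict`, `train_student`) are invented for illustration; the point is only the data flow: the teacher labels training pixels from multispectral data, and the student learns from those pseudo-labels while seeing RGB only.

```python
import numpy as np


def make_pixels(n, rng):
    """Synthetic plant/soil pixels; reflectance ranges are illustrative only."""
    plant = rng.random(n) < 0.5

    def band(plant_range, soil_range):
        # Draw each band from a different range for plant and soil pixels.
        return np.where(plant, rng.uniform(*plant_range, n), rng.uniform(*soil_range, n))

    red = band((0.05, 0.2), (0.3, 0.5))
    green = band((0.3, 0.6), (0.2, 0.35))
    blue = band((0.05, 0.2), (0.15, 0.3))
    nir = band((0.5, 0.9), (0.25, 0.4))
    rgb = np.stack([red, green, blue], axis=1)
    return rgb, red, nir, plant.astype(float)


def teacher_predict(red, nir, threshold=0.3):
    """Stand-in teacher: NDVI threshold on multispectral bands (1 = plant)."""
    ndvi = (nir - red) / (nir + red + 1e-8)
    return (ndvi > threshold).astype(float)


def train_student(rgb, labels, lr=0.5, steps=500):
    """Stand-in student: logistic regression on standardised RGB values."""
    mean, std = rgb.mean(axis=0), rgb.std(axis=0)

    def features(values):
        # Standardise with training statistics and append a bias column.
        return np.hstack([(values - mean) / std, np.ones((len(values), 1))])

    x = features(rgb)
    w = np.zeros(x.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w)))
        w -= lr * x.T @ (p - labels) / len(labels)  # mean cross-entropy gradient
    return lambda values: (features(values) @ w > 0).astype(float)


rng = np.random.default_rng(0)
train_rgb, train_red, train_nir, train_truth = make_pixels(2000, rng)
pseudo_labels = teacher_predict(train_red, train_nir)  # no manual labels used
student = train_student(train_rgb, pseudo_labels)

test_rgb, _, _, test_truth = make_pixels(500, rng)  # student sees RGB only
accuracy = (student(test_rgb) == test_truth).mean()
print(f"student accuracy on held-out pixels: {accuracy:.3f}")
```

No ground-truth labels enter training; `train_truth` and `test_truth` are used only to check the result.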
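The Segment Anything Model expects an ordinary three-channel 8-bit image, so multispectral data has to be mapped to three channels before it can serve as input. The post does not specify which bands were used; the sketch below assumes a colour-infrared composite (NIR, red, green) with an independent percentile stretch per channel, and the function name `to_uint8_composite` is hypothetical.

```python
import numpy as np


def to_uint8_composite(bands, order, low_pct=2.0, high_pct=98.0):
    """Stack three bands of a multispectral cube into an (H, W, 3) uint8 image.

    bands: dict mapping band name -> (H, W) float array of reflectance.
    order: three band names placed in the R, G and B channels, in that order.
    Each channel is percentile-stretched independently to [0, 255].
    """
    channels = []
    for name in order:
        band = bands[name].astype(np.float64)
        lo, hi = np.percentile(band, [low_pct, high_pct])
        scaled = (band - lo) / max(hi - lo, 1e-12)  # guard against flat bands
        channels.append(np.clip(scaled, 0.0, 1.0))
    return np.round(np.stack(channels, axis=-1) * 255).astype(np.uint8)


rng = np.random.default_rng(0)
bands = {name: rng.uniform(0.0, 0.6, (64, 96)) for name in ("green", "red", "nir")}
composite = to_uint8_composite(bands, ("nir", "red", "green"))
print(composite.shape, composite.dtype)  # (64, 96, 3) uint8
```

With the `segment_anything` package and a downloaded checkpoint, such a composite could be passed to `SamPredictor.set_image`, and a single annotated bounding box supplied as `predictor.predict(box=np.array([x0, y0, x1, y1]), multimask_output=False)` returns a mask; this is how a box can replace a hand-drawn mask during annotation.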
