PUT-ISS: Our robotics experiment on the ISS

We are thrilled to announce our project, PUT-ISS!

As part of the LeopardISS project, we are set to launch our robotic experiment on the International Space Station (ISS) in collaboration with KP Labs.

The LeopardISS project, developed by KP Labs, is set to launch with the IGNIS mission, with the participation of the Polish astronaut Sławosz Uznański-Wiśniewski. It aims to validate AI algorithms in orbit and will feature presentations on cutting-edge space research and Poland’s growing role in the industry.

Our mission and contribution

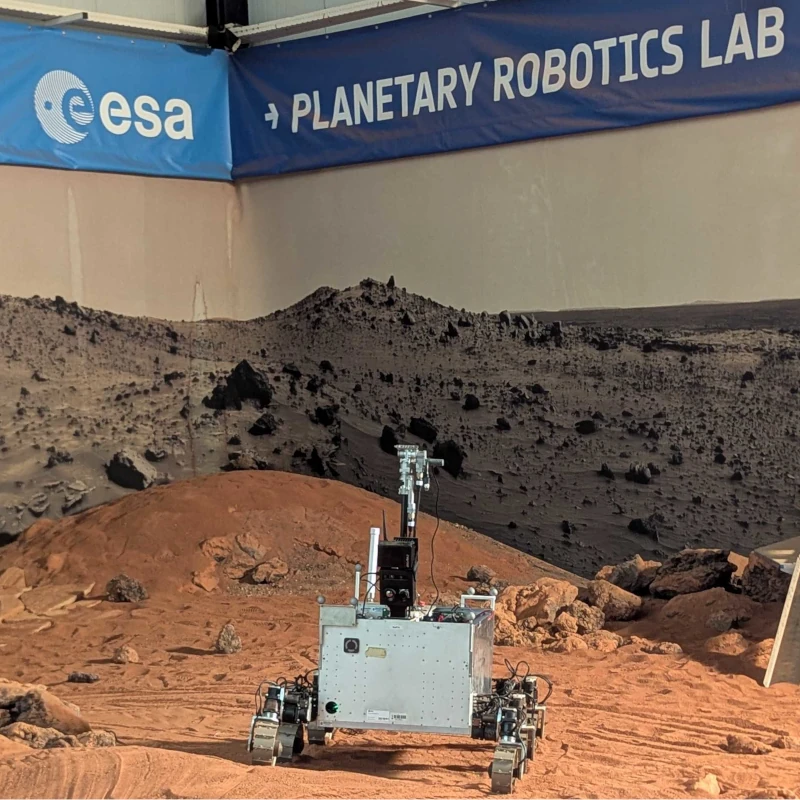

Our project aims to develop algorithms for stereo vision and 3D mapping in a real space environment. It is tailored to autonomous lunar and planetary rover missions and focuses primarily on lunar-like environments, with the goal of enabling future rovers to move autonomously. Our contribution is not limited to algorithm development; it also includes optimising the algorithms and deploying them on the space-proven hardware delivered by our partner.

The system is intended to advance the field of space robotics and to validate the potential of computer vision and autonomous applications for future planetary exploration. It also demonstrates that the Robot Operating System (ROS) can be deployed on resource-limited, ready-to-fly space hardware.

Computation resources

LeopardISS will be hosted in the ICE Cubes Facility, operated by the ICE Cubes Service, within the ISS Columbus module, enabling real-time communication. This ensures the KP Labs team can monitor and manage operations seamlessly from Earth.

Deployment on the LeopardISS platform is an integral part of our work. The platform is a cutting-edge Data Processing Unit (DPU) with energy-efficient processing cores. Despite its high-performance processing capabilities, the algorithms still require dedicated optimisation to run on space hardware. The PUT-ISS ecosystem has been developed with the platform’s capabilities and limitations in mind, so that it meets the operational and safety requirements.

Visual data

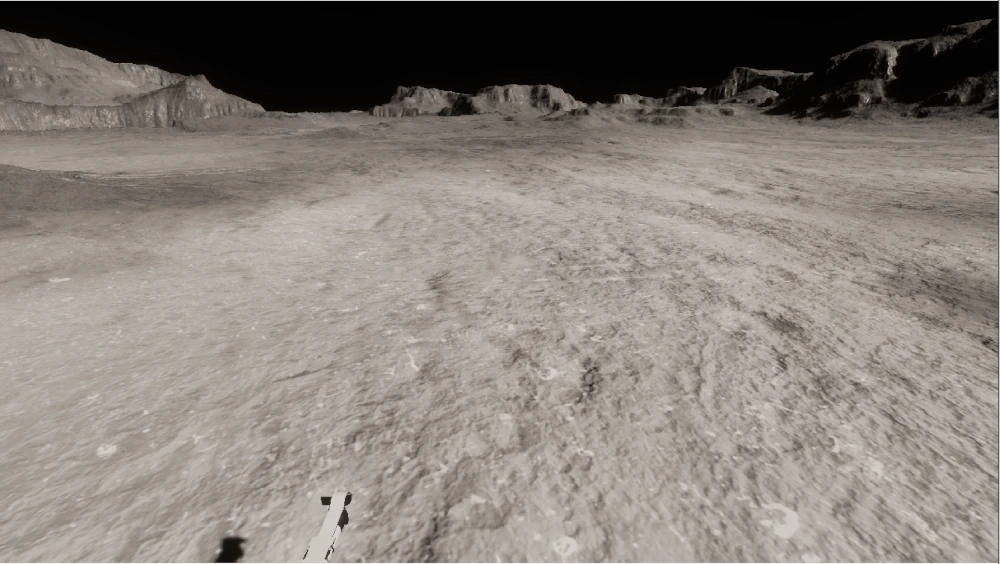

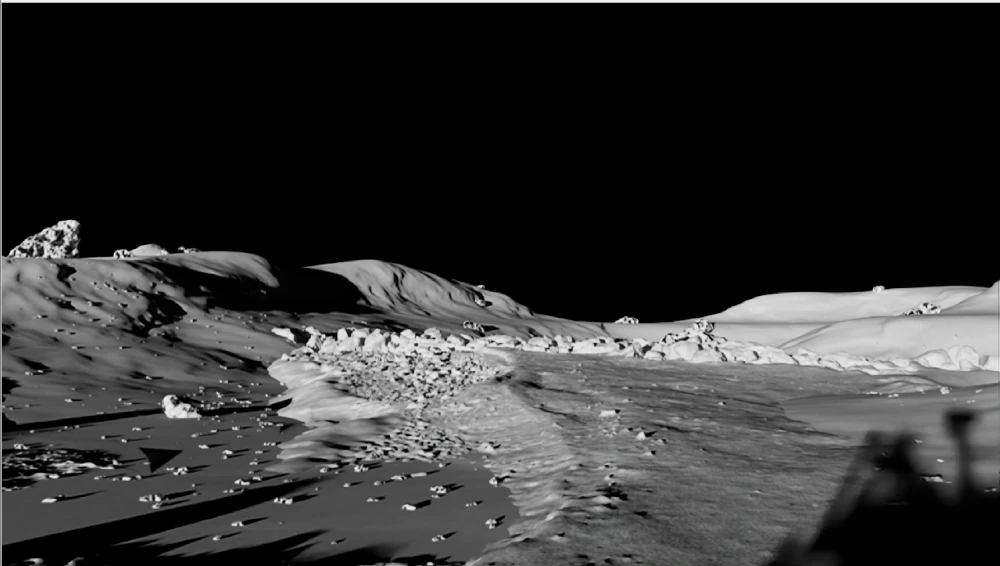

Robotic planetary exploration is still at a very early stage. As a result, robotic data that meets modern requirements is scarce or missing altogether. To validate our algorithms, we therefore used datasets that visually imitate the Moon’s environment. One of them was collected in the Sahara Desert in Morocco, providing real-world imagery. The two others were designed and generated using game engines, providing a wide range of synthetic recordings.

- An example image from the Morocco dataset:

- An example frame generated in the LunarSim environment:

- An example frame rendered with the IsaacSim simulator:

Processing

As mentioned above, our system aims to generate a 3D map of the robot’s surroundings. The map not only improves robot teleoperation but also lets the rover return to base autonomously when communication fails. Using stereo cameras, the system builds a three-dimensional map that supports terrain elevation estimation and obstacle avoidance. The whole pipeline has a node-based structure built on the Robot Operating System (ROS). Its elements are explained below.

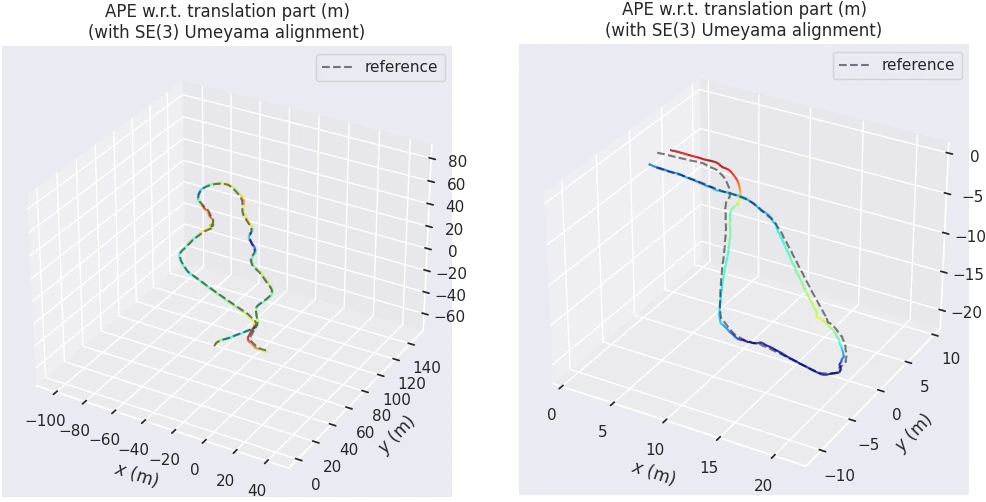

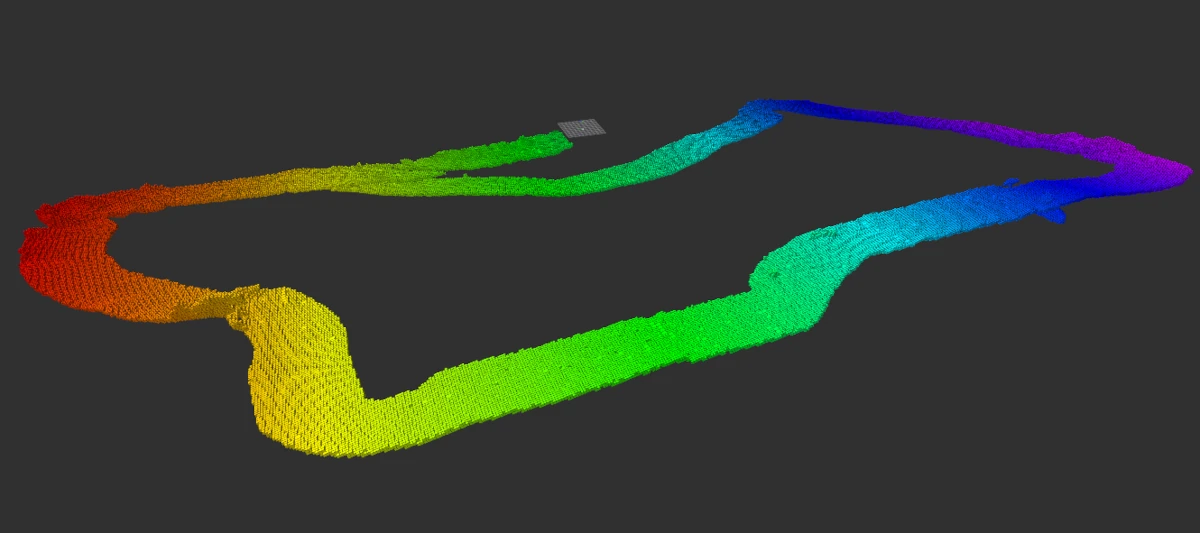

Trajectory estimation

The first step of the pipeline is an algorithm that estimates the robot trajectory. Using visual odometry, it computes the rover’s movement between frames and, from that, the rover’s current position. Accurate and fast motion estimation is key to building a good map. The plots above present example trajectories: the ground truth in grey and the estimate coloured by error.
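To illustrate how frame-to-frame motion turns into a trajectory, here is a minimal sketch that chains relative motions into global poses. It is simplified to planar (2D) motion, and the function names `compose` and `integrate` are hypothetical; it is not the PUT-ISS implementation, which works on camera images and 3D poses.

```python
import math


def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the robot frame,
    to a global pose (x, y, theta). Planar motion is an assumption."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    # Rotate the local translation into the global frame, then add it.
    gx = x + math.cos(theta) * dx - math.sin(theta) * dy
    gy = y + math.sin(theta) * dx + math.cos(theta) * dy
    return (gx, gy, theta + dtheta)


def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain per-frame motions into a list of global poses."""
    trajectory = [start]
    for delta in deltas:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory


# Hypothetical per-frame motions: 1 m forward, 1 m forward followed by a
# 90 degree left turn, then 1 m forward along the new heading.
steps = [(1.0, 0.0, 0.0), (1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)]
trajectory = integrate(steps)
```

Because every pose is built on the previous one, a small error in any single step propagates to all later poses, which is why the accuracy of the per-frame estimate matters so much for the map.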

Point cloud generation

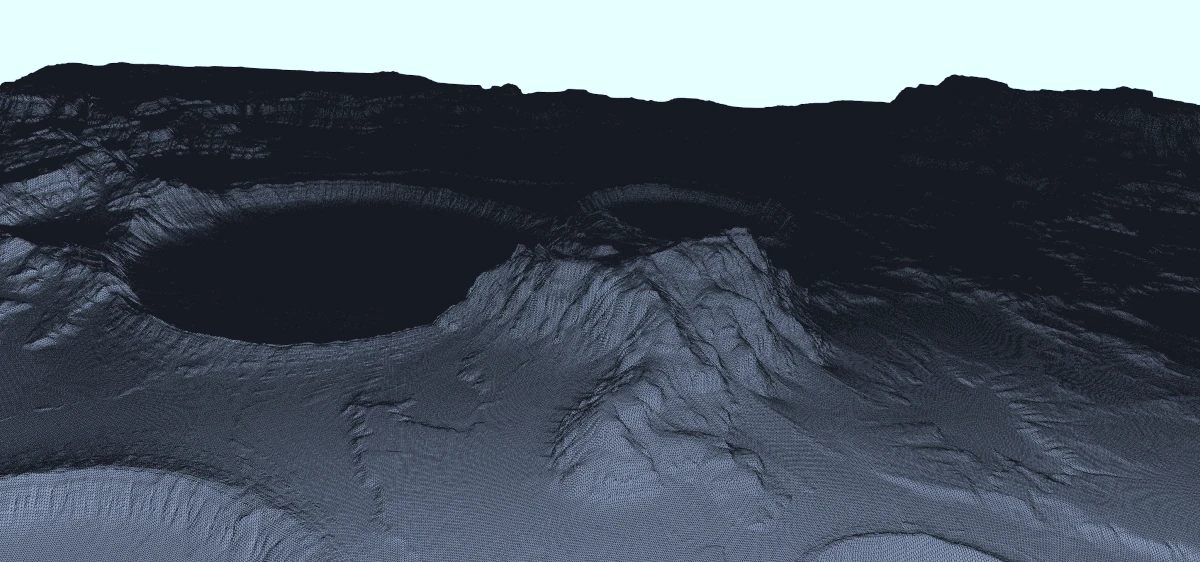

The point cloud generation algorithm runs alongside trajectory estimation. It uses a pair of synchronised images to calculate the disparity, i.e. the difference in a feature’s position between the two images. By triangulating the matching pixels using the baseline (the distance between the two cameras), the algorithm reconstructs the 3D coordinates of the observed scene, generating a 3D map of local image features. An example point cloud is shown above.
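The triangulation step can be sketched for the common case of a rectified stereo pair and a pinhole camera model, where depth follows from Z = f · B / d. Both the rectified setup and the names below (`disparity_to_point`, `focal_px`, `baseline_m`, `cx`, `cy`) are assumptions made for illustration, and the numbers are made up rather than taken from the PUT-ISS cameras.

```python
def disparity_to_point(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Back-project pixel (u, v) with a given disparity (in pixels) to a
    3D point in the left camera frame, assuming rectified pinhole stereo."""
    if disparity <= 0:
        return None  # no valid match, or a point at infinity
    z = focal_px * baseline_m / disparity  # depth: Z = f * B / d
    x = (u - cx) * z / focal_px            # lateral offset from the optical axis
    y = (v - cy) * z / focal_px            # vertical offset from the optical axis
    return (x, y, z)


# Illustrative values only: 500 px focal length, 10 cm baseline,
# principal point at (320, 240), and a 10 px disparity.
point = disparity_to_point(u=420, v=240, disparity=10.0,
                           focal_px=500.0, baseline_m=0.1, cx=320.0, cy=240.0)
```

Depth is inversely proportional to disparity, so distant points (small disparity) are reconstructed with much lower precision than nearby ones.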

Terrain 3D mapping

In this step, an algorithm combines the estimated position and the local point cloud to generate a global environment map. The OctoMap occupancy grid mapping approach is used because it fits within the computation and memory constraints. The algorithm works iteratively on the most recent data provided by the previous nodes. An example of map building is presented in the video below:
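The occupancy update at the core of this kind of mapping can be sketched in a few lines. The example uses the clamped log-odds update that OctoMap-style mapping relies on, but stores voxels in a flat dictionary instead of an octree to stay short. The `VoxelMap` class and the probability values are illustrative assumptions, not the configuration used on LeopardISS.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


# Illustrative sensor model and clamping bounds (assumed values).
L_HIT, L_MISS = logit(0.7), logit(0.4)
L_MIN, L_MAX = logit(0.12), logit(0.97)


class VoxelMap:
    """Flat voxel grid with clamped log-odds occupancy (no octree)."""

    def __init__(self, resolution):
        self.resolution = resolution
        self.log_odds = {}  # voxel index -> log-odds of being occupied

    def key(self, point):
        # Discretise a metric 3D point into an integer voxel index.
        return tuple(math.floor(c / self.resolution) for c in point)

    def update(self, point, hit):
        # Add evidence for "occupied" (hit) or "free" (miss), then clamp.
        k = self.key(point)
        value = self.log_odds.get(k, 0.0) + (L_HIT if hit else L_MISS)
        self.log_odds[k] = min(L_MAX, max(L_MIN, value))

    def probability(self, point):
        # Unobserved voxels have log-odds 0, i.e. probability 0.5.
        value = self.log_odds.get(self.key(point), 0.0)
        return 1.0 - 1.0 / (1.0 + math.exp(value))


grid = VoxelMap(resolution=0.1)
obstacle = (1.0, 0.0, 5.0)
prior = grid.probability(obstacle)        # nothing observed yet
grid.update(obstacle, hit=True)
grid.update(obstacle, hit=True)
after_two_hits = grid.probability(obstacle)
for _ in range(10):
    grid.update(obstacle, hit=True)
saturated = grid.probability(obstacle)    # limited by the upper clamp
```

Working in log-odds makes each update a single addition, and clamping keeps the map able to change its mind quickly when the scene changes, both of which suit resource-limited hardware.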

Final results and statistical analysis

The image above presents the final output map for one of the prepared datasets. Three experiments were prepared in total, enabling evaluation in different lunar-like environments. In the final step of each experiment, a physical analysis is performed to validate operation in a real space environment.
